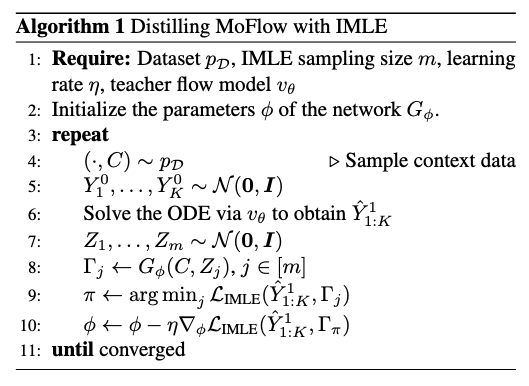

The IMLE distillation process is outlined in Algorithm 1.

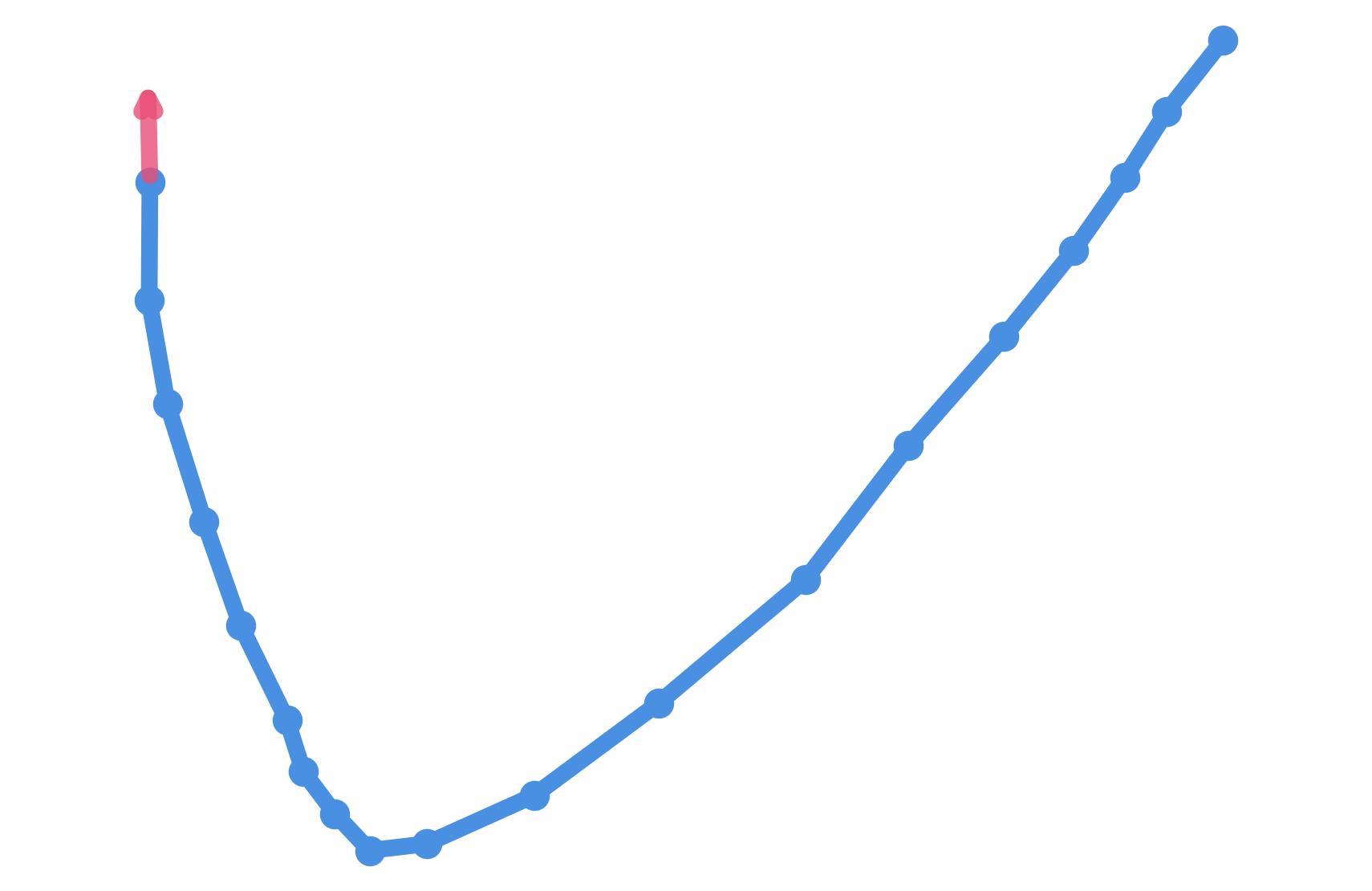

Specifically, lines 4–6 describe the standard ODE-based sampling of the teacher model, MoFlow. This produces \( K \) correlated multi-modal trajectory predictions

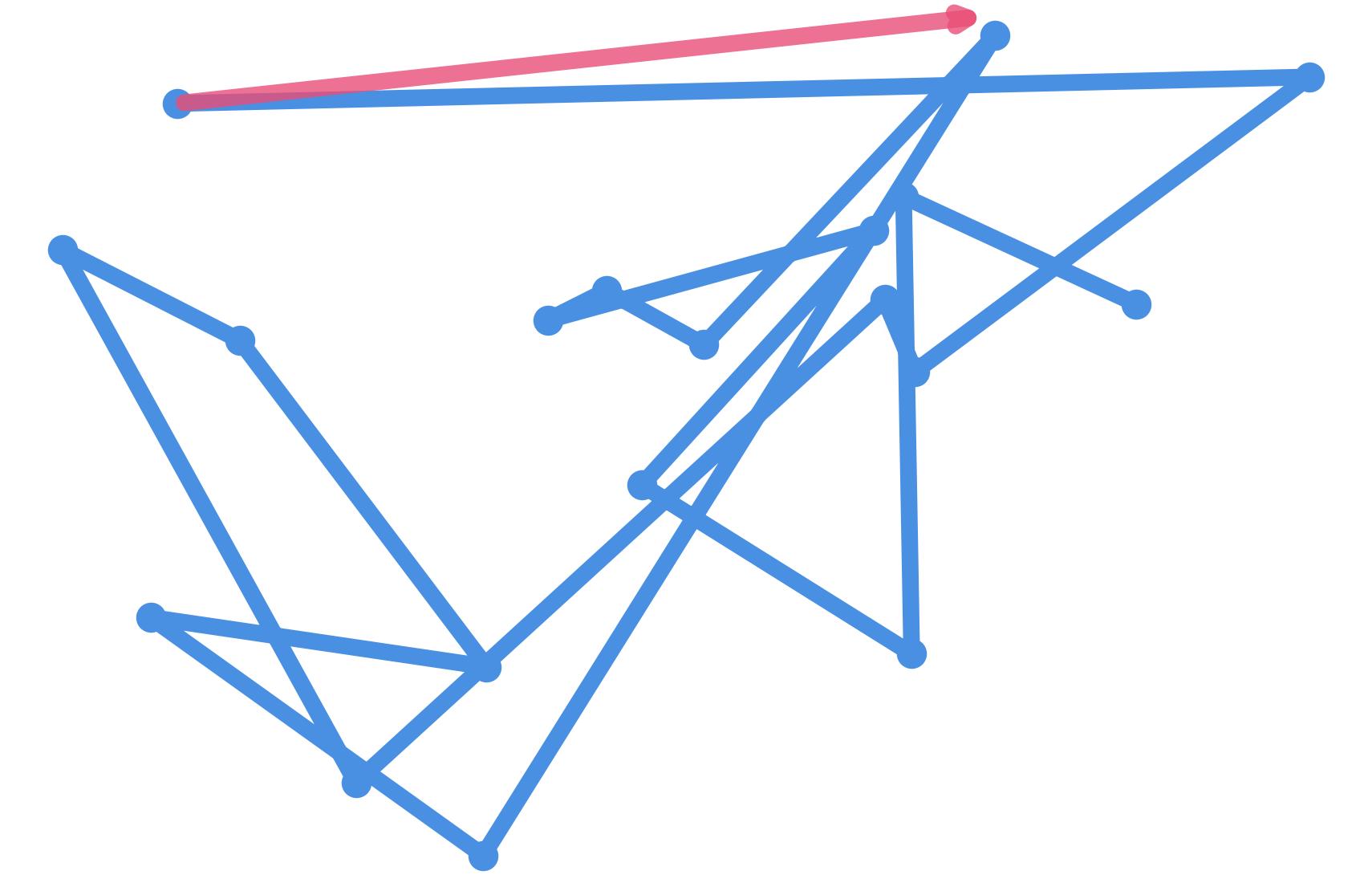

\( \hat{Y}^1_{1:K} \) conditioned on the context \( C \). A conditional IMLE generator \( G_\phi \) then uses a noise

vector \( Z \) and context \( C \) to generate \( K \)-component trajectories

\( \Gamma \), matching the shape of \( \hat{Y}^1_{1:K} \).

The conditional IMLE objective generates more samples than those in the distillation dataset for the same context \( C \). Specifically, \( m \) i.i.d. samples are drawn via \( G_\phi \), and the one closest to the teacher prediction \( \hat{Y}^1_{1:K} \) is selected for loss computation. This minimizes the distance to the nearest student sample, ensuring the teacher model’s mode is well-approximated.

To preserve trajectory prediction multi-modality, we employ the Chamfer distance \(d_{\text{Chamfer}}(\hat{Y}^1,\Gamma)\) as our loss function

where \( \Gamma^{(i)} \in \mathbb{R}^{A \times 2T_f} \) is the \( i \)-th component of the IMLE-generated correlated trajectory.